Diving into Mathematical Logic brought me across Peirce’s Law, which uses the logical implication ⇒ operator only and is always True irrespective of what its two inputs (say) P and Q are:

((P ⇒ Q) ⇒ P) ⇒ PTower of N-place implicational tautologies

This got me thinking about the possibility of logical formulae that only use the logical implication ⇒ operator and are always True for any number of inputs.

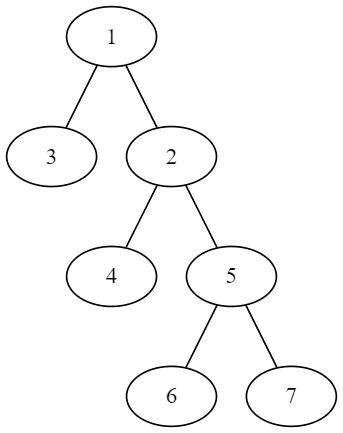

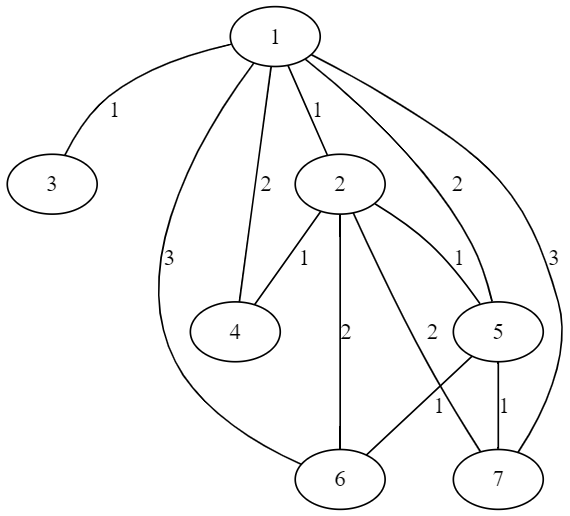

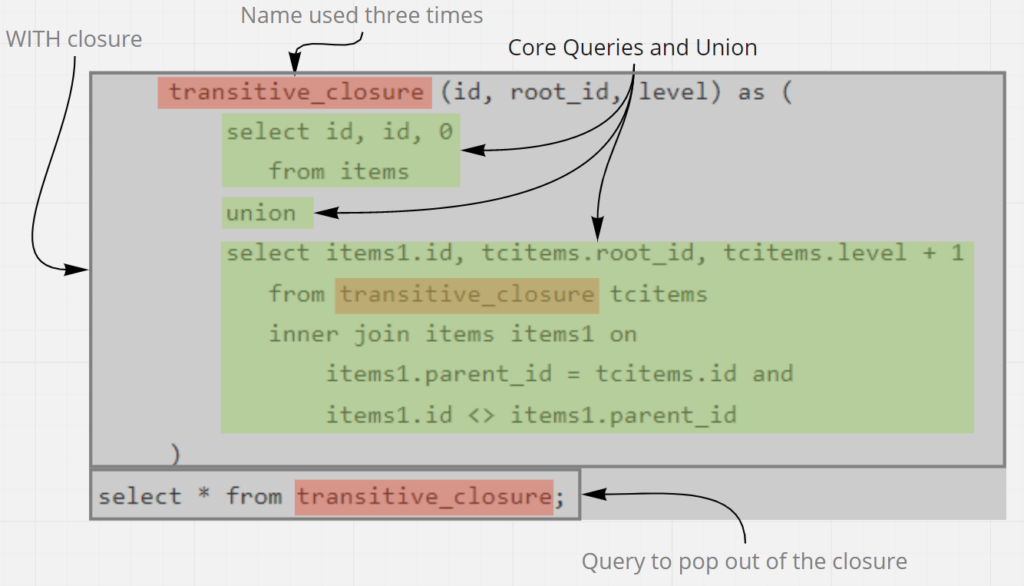

A Hierarchy of Laws

I investigated this with some Python code and it turns out that there is a hierarchy of Laws:

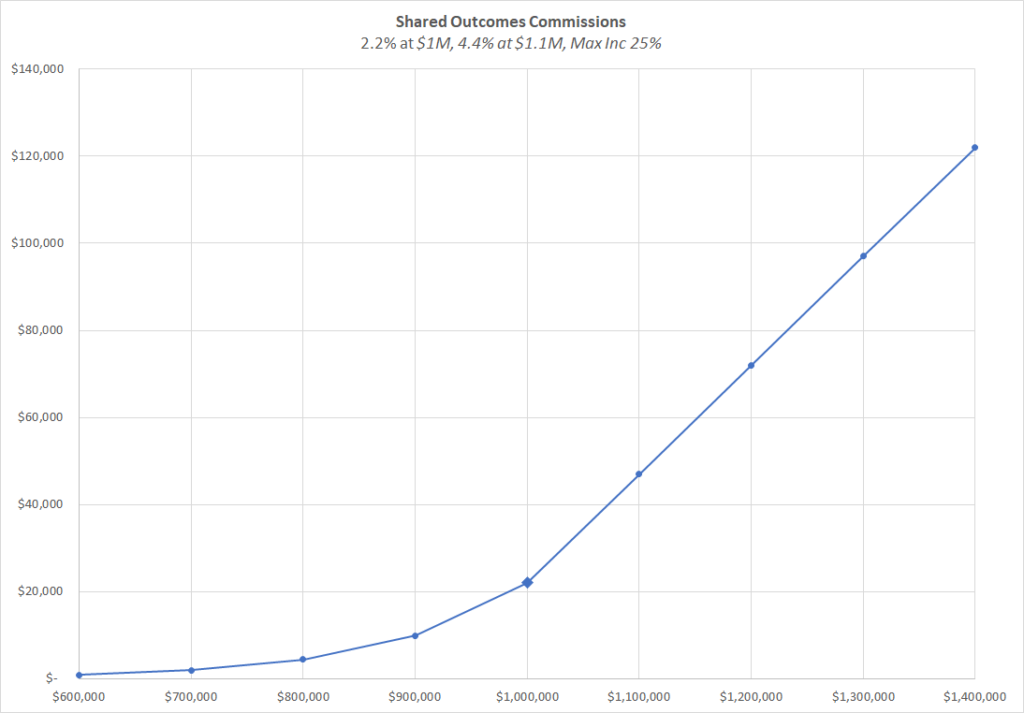

| Number of Variables – Arity | Variables | Number of Shortest Tautologies | Shortest Tautologies (Unique up to variable renaming) | Comments |

|---|---|---|---|---|

| 0 | – | 1 | True | |

| 1 | P | 1 | P ⇒ P | |

| 2 | P, Q | 2 | P ⇒ (Q ⇒ P) P ⇒ (Q ⇒ Q) | Peirce’s Law is just one of sixteen two-variable tautologies with three ⇒ operators: P ⇒ ((P ⇒ Q) ⇒ P) P ⇒ (P ⇒ (Q ⇒ P)) (P ⇒ Q) ⇒ (P ⇒ Q) P ⇒ (Q ⇒ (P ⇒ Q)) (P ⇒ Q) ⇒ (Q ⇒ Q) P ⇒ (Q ⇒ (Q ⇒ Q)) ((P ⇒ Q) ⇒ P) ⇒ P P ⇒ ((Q ⇒ P) ⇒ P) (P ⇒ Q) ⇒ (P ⇒ P) P ⇒ (Q ⇒ (P ⇒ P)) P ⇒ ((Q ⇒ Q) ⇒ P) P ⇒ (Q ⇒ (Q ⇒ P)) ((P ⇒ P) ⇒ Q) ⇒ Q P ⇒ ((P ⇒ Q) ⇒ Q) (P ⇒ P) ⇒ (Q ⇒ Q) P ⇒ (P ⇒ (Q ⇒ Q)) |

| 3 | P, Q, R | 5 | (P ⇒ Q) ⇒ (R ⇒ R) P ⇒ (Q ⇒ (R ⇒ R)) P ⇒ (Q ⇒ (R ⇒ Q)) P ⇒ ((Q ⇒ R) ⇒ P) P ⇒ (Q ⇒ (R ⇒ P)) | |

| 4 | P, Q, R, S | 16 | P ⇒ (((Q ⇒ R) ⇒ S) ⇒ P) P ⇒ ((Q ⇒ (R ⇒ S)) ⇒ P) P ⇒ (Q ⇒ ((R ⇒ S) ⇒ P)) P ⇒ ((Q ⇒ R) ⇒ (S ⇒ P)) P ⇒ (Q ⇒ (R ⇒ (S ⇒ P))) ((P ⇒ Q) ⇒ R) ⇒ (S ⇒ S) (P ⇒ (Q ⇒ R)) ⇒ (S ⇒ S) P ⇒ ((Q ⇒ R) ⇒ (S ⇒ S)) (P ⇒ Q) ⇒ (R ⇒ (S ⇒ S)) P ⇒ (Q ⇒ (R ⇒ (S ⇒ S))) (P ⇒ Q) ⇒ (R ⇒ (S ⇒ R)) P ⇒ (Q ⇒ (R ⇒ (S ⇒ R))) P ⇒ (Q ⇒ ((R ⇒ S) ⇒ Q)) P ⇒ (Q ⇒ (R ⇒ (S ⇒ Q))) | |

| 5 | P, Q, R, S , T | 42 | … | |

| 6 | P, Q, R, S, T, U | 132 | … |

Reader’s feedback is welcome!